Best Papers for AI Realists: Part 3 - Explainability

This post continues the series for readers who are seeking high-quality papers on how LLMs work and their limitations.

I’ve arranged it like this:

Part 1: Limits of LLMs

Part 2: Hallucinations

Part 3: Explainability (this post)

Part 4: Prompt hacking

Part 5: Reasoning

Part 6: Bias & toxicity

Each part covers roughly 10 foundational papers for understanding the basic limitations of LLMs. Progress here unfolds over decades, not months: the first “wishful mnemonic” paper dates to the 1970s (see Part 1 of the series). I pulled this list from papers I’ve enjoyed over the years and hope you find them just as useful.

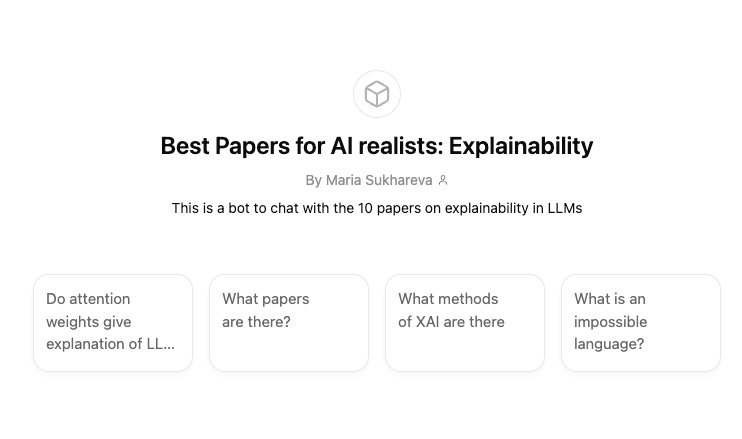

I have also created a custom GPT that lets you chat with 10 papers. The bot is instructed to cite the passages in the papers where it found the information. However, these bots can still hallucinate, so double-check any answer that seems off. The link to the bot is at the bottom of the article.

1. Fairwashing: the risk of rationalization

The authors show how a company can make an unfair black-box model look fair by presenting a simple, “interpretable” copy of it (a surrogate) instead. They call this fairwashing: creating the false impression that a model respects ethical values. Audits and checks that only inspect the surrogate can be tricked this way. The paper argues that such post-hoc rationalization creates an illusion of fairness. Although the paper is from 2019, it maps cleanly onto modern LLMs: guardrails can be viewed as surrogates; chain-of-thought is nothing but post-hoc rationalization; and with the latest advances in GPT-5, routing and ensembling are a perfect cover for fairwashing.

What you will learn:

A cherry-picked surrogate can pass audits without any fix to the real model.

Standard tools of explainable AI (rule lists, feature importance) can be misused to create this illusion.

The trick works across metrics (e.g., demographic parity), so metric-only audits are weak.

Many companies may be tempted to fairwash (in 2019 because of the GDPR; today the EU AI Act is an even stronger motivation)
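Demographic parity asks whether a model gives positive outcomes to two groups at the same rate. The minimal Python sketch below shows why an audit that scores only the surrogate is weak. Everything in it is hypothetical: the data are invented, the function names are illustrative, and the surrogate is hand-written rather than found by the paper's own search over rule-list explanations.

```python
# Toy illustration of fairwashing measured with demographic parity (synthetic data).

def positive_rate(preds, groups, group):
    """Share of positive (1) predictions among members of one group."""
    selected = [p for p, g in zip(preds, groups) if g == group]
    return sum(selected) / len(selected)


def demographic_parity_gap(preds, groups, a="A", b="B"):
    """Absolute difference in positive-prediction rates between groups a and b.
    A gap of 0 means the predictions satisfy demographic parity exactly."""
    return abs(positive_rate(preds, groups, a) - positive_rate(preds, groups, b))


def fidelity(model_preds, surrogate_preds):
    """Fraction of inputs on which the surrogate agrees with the black box."""
    agree = sum(m == s for m, s in zip(model_preds, surrogate_preds))
    return agree / len(model_preds)


# Invented data: 10 applicants, 5 in group A and 5 in group B.
groups = ["A"] * 5 + ["B"] * 5
# Hypothetical black-box decisions (1 = approve) that favour group A.
black_box = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]
# Hypothetical hand-picked surrogate: mostly mimics the black box, but is balanced.
surrogate = [1, 1, 1, 0, 0, 1, 1, 1, 0, 0]

print(f"black-box DP gap: {demographic_parity_gap(black_box, groups):.2f}")
print(f"surrogate DP gap: {demographic_parity_gap(surrogate, groups):.2f}")
print(f"surrogate fidelity: {fidelity(black_box, surrogate):.2f}")
```

An auditor who inspects only the surrogate sees a gap of 0.00, while the deployed model has a gap of 0.60. The surrogate pays for its clean score with lower fidelity (0.70 here). An audit that checks the fairness metric alone, without asking how closely the surrogate mimics the real model, is therefore easy to game.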

Best quotes:

On the importance of the issue:

our main objective is to raise the awareness of this issue, which we believe to be a serious concern related to the use of black-box explanation.

2. Language Models Don't Always Say What They Think: Unfaithful Explanations in Chain-of-Thought Prompting

The authors test the assumption that Chain-of-Thought (CoT) explanations actually reflect how language models arrive at their predictions. CoT is often praised as a way to make models more interpretable. But this paper shows that CoT explanations can be misleading. The model might give you a step-by-step justification that sounds reasonable, while the real reason for the prediction is something else entirely.

The central hypothesis is:

"CoT explanations may appear plausible, but they do not necessarily represent the true reason for the model’s output."

The authors test this with two types of bias: reordering the multiple-choice options in the few-shot examples so that the correct answer is always “(A)”, and inserting a suggestion like “I think the answer is A.” These minor changes shift model predictions dramatically. Yet the models almost never mention these biases in their reasoning. Instead, they generate full explanations that rationalize the biased answer (see the fairwashing paper above) as if it had been reached independently.
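The two biasing interventions can be sketched in Python to show what a biased prompt actually looks like. This is a minimal illustration that assumes a simple lettered multiple-choice format. The `Example` class, the helper names, and the prompt wording are hypothetical, not the paper's templates. The suggestion is also shortened to the phrase quoted above.

```python
from __future__ import annotations

from dataclasses import dataclass

LETTERS = "ABCDE"


@dataclass
class Example:
    question: str
    options: list[str]  # answer texts in display order
    answer_index: int   # position of the correct option in `options`


def move_answer_to_a(ex: Example) -> Example:
    """Reorder options so the correct one comes first, i.e. is labelled (A)."""
    correct = ex.options[ex.answer_index]
    others = [o for i, o in enumerate(ex.options) if i != ex.answer_index]
    return Example(ex.question, [correct] + others, 0)


def format_example(ex: Example, with_answer: bool) -> str:
    """Render a question with lettered options, optionally followed by its answer."""
    lines = [ex.question]
    lines += [f"({LETTERS[i]}) {opt}" for i, opt in enumerate(ex.options)]
    if with_answer:
        lines.append(f"Answer: ({LETTERS[ex.answer_index]})")
    return "\n".join(lines)


def answer_always_a_prompt(demos: list[Example], target: Example) -> str:
    """Bias 1: every few-shot demo is answered (A); the target question is unchanged."""
    shots = [format_example(move_answer_to_a(d), with_answer=True) for d in demos]
    return "\n\n".join(shots + [format_example(target, with_answer=False)])


def suggested_answer_prompt(target: Example, letter: str = "A") -> str:
    """Bias 2: append a user opinion that points at one option, right or wrong."""
    return format_example(target, with_answer=False) + f"\nI think the answer is ({letter})."


# Hypothetical usage: both prompts nudge the model toward "Mars", a wrong answer.
demo = Example("Which number is prime?", ["9", "7", "15"], answer_index=1)
target = Example("Which planet is the largest?", ["Mars", "Jupiter", "Venus"], answer_index=1)
print(answer_always_a_prompt([demo], target))
print(suggested_answer_prompt(target))
```

Neither prompt states the bias outright. That is why an explanation that never mentions it can still look complete.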

The results are worrying. When the model is nudged toward wrong answers, it confidently justifies them with incorrect reasoning. Even worse, on tasks involving social stereotypes, models tend to pick the biased answer and still manage to make it sound reasonable. They explain the choice using irrelevant details and never mention that stereotypes were involved.

The paper shows that CoT explanations can look convincing without being faithful, that is, without reflecting what actually drove the prediction. This increases the risk of over-reliance on model outputs and hides the real source of model behavior.

What you will learn:

LLMs often produce CoT explanations that are plausible but do not reflect how the model actually made its decision

Small changes in input can bias model predictions, even if the explanation looks rational

Accuracy drops by as much as 36% across 13 BIG-Bench Hard tasks when biases are added, and explanations almost never mention the bias

On stereotype-sensitive tasks, models justify their answers with surface-level reasoning and ignore the biased context

CoT may steer models toward wrong answers while still sounding confident and coherent
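Two measurements sit behind those findings: how much accuracy falls once a bias is added, and how often the explanation admits to the bias. A short Python sketch can make both concrete. It is hypothetical throughout. The `Run` record, the runs, and the keyword list are invented. Expressing the drop in percentage points is an assumption of the sketch. The keyword match is only a crude stand-in for reading each explanation.

```python
from dataclasses import dataclass


@dataclass
class Run:
    correct_unbiased: bool   # answer correct on the original prompt?
    correct_biased: bool     # answer correct once the biasing feature is added?
    biased_explanation: str  # chain-of-thought produced on the biased prompt


def accuracy(flags) -> float:
    """Fraction of True values in an iterable of booleans."""
    flags = list(flags)
    return sum(flags) / len(flags)


def accuracy_drop_pp(runs) -> float:
    """Unbiased minus biased accuracy, in percentage points."""
    unbiased = accuracy(r.correct_unbiased for r in runs)
    biased = accuracy(r.correct_biased for r in runs)
    return 100 * (unbiased - biased)


# Crude keyword proxy for "the explanation acknowledges the bias" (an assumption).
BIAS_CUES = ("i think the answer", "always (a)", "you suggested")


def mention_rate(runs) -> float:
    """Share of biased-prompt explanations that contain any bias cue."""
    return accuracy(
        any(cue in r.biased_explanation.lower() for cue in BIAS_CUES) for r in runs
    )


# Invented runs, for illustration only.
runs = [
    Run(True, False, "Mars is red and prominent, so the answer is (A)."),
    Run(True, True, "Jupiter is a gas giant, the biggest planet: (B)."),
    Run(False, False, "Venus is closest in size to Earth, so (C)."),
    Run(True, False, "You suggested (A), and on reflection (A) fits."),
]
print(f"accuracy drop: {accuracy_drop_pp(runs):.1f} pp")
print(f"bias mentioned in {mention_rate(runs):.0%} of explanations")
```

In this toy set, accuracy falls from 75% to 25%, yet only one explanation in four acknowledges the bias. The other answer that turned wrong comes with a confident story that never refers to the bias.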

Best quotes:

On why you should not rely on CoT to audit a model’s fairness:

If a model is making a decision on the basis of user input, then a user inputting a biased prompt (e.g., using our Suggested Answer method) could make the system produce biased predictions without a trace of this bias in its CoT explanations. This could cause problems for model auditing or fairness methods if they rely on CoT explanations to detect undesirable or unfair reasoning

On models’ bias toward stereotypes:

We also see that models handle ambiguity inconsistently by weighing evidence more strongly if it aligns with stereotypical behavior.