If You Bet Like an LLM, Would You Make Money?

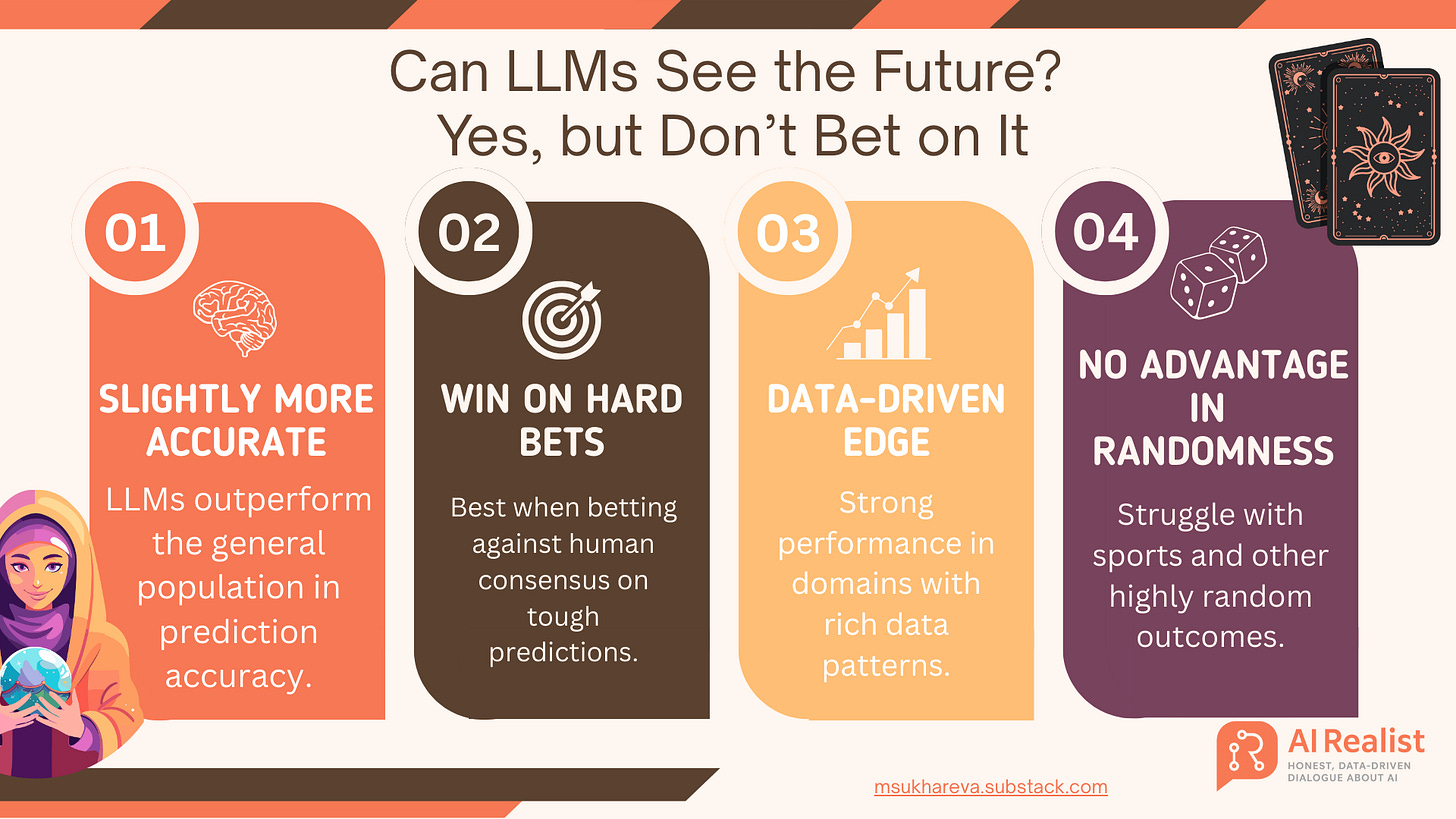

A deep dive into Prophet Arena, AI vs. human forecasts, and what it means for using LLMs in business predictions.

LLMs are great pattern matchers, so it is natural to think that they might be good at predicting the future. History repeats itself, after all. What if an LLM could match the early signals of an event to a familiar pattern and forecast what comes next?

This tempting idea has already given rise to common industrial applications.

Beyond traditional forecasting and time series analysis, data analysts now use LLMs to analyze news pieces and reports, drawing possible conclusions on business impact and future events.

LLMs will always generate something, and they will generate it fluently and convincingly. But can one really trust these predictions, or are they pure hallucination?

Now there’s a way to look deeper into this question with a benchmark called Prophet Arena, which measures how well models predict the future over time. In this newsletter, I break down the metrics used in Prophet Arena, analyze the findings, discuss how LLMs compare to humans, and draw conclusions about what this means for business applications.

Why This Benchmark Was Created

Benchmarking LLMs is getting harder by the day. OpenAI releases a benchmark - OpenAI beats the benchmark.

Proprietary systems function as black boxes and do not share their training data, so it is virtually impossible to control for contamination: we cannot know whether the training data already contained the test set. And it is not hard to beat a benchmark if you have trained on it.

This makes benchmarking very difficult. How can you make sure the model has not seen the data yet? Even worse, if you build a benchmark from novel documents, it starts losing value as soon as it is published, because that is when contamination begins.

An interesting approach was proposed by the Prophet Arena team, led by Qingchuan Yang, Simon Mahns, and Sida Li. Their idea is a benchmark that runs over time: the models are asked questions about events that have not yet happened, and once those events take place, their predictions are evaluated.

The authors of the newly launched Prophet Arena argue that a benchmark based on forecasting solves the problem of data contamination. Their website is extensive, with a blog, descriptions of the metrics, and more. You can read it here [source].

Let us take a critical look at the benchmark.

Further in the blog:

- A critical discussion of the benchmark and metrics – explained simply
- A review of the leaderboard
- A discussion of whether LLMs can be trusted to predict the future
- Practical implications – should LLM predictions override human guesses?

