ChatGPT Creates Monsters

A Curious Case of Image Generation

Recently, OpenAI released a new image model: Image-1.5. It is undoubtedly one of the best models on the market, with generation quality comparable to Google's Nano Banana Pro. It also edits images very well from a simple prompt.

Yet, just as with language models, image generation creates an illusion of understanding. Just as an LLM is not a writer, an image model is not a painter. Image models have no communicative intent to educate, inspire, scare, or mock. They generate for the sake of generation. That is what we will discuss in this article: how image models are built, why they create an illusion of understanding, and what lies behind their architectures.

A Curious Case of Monster Generation

The latest image models have gotten much better at generating text. There are fewer spelling mistakes and fewer nonexistent words, and the models can even produce infographics that look coherent at a glance.

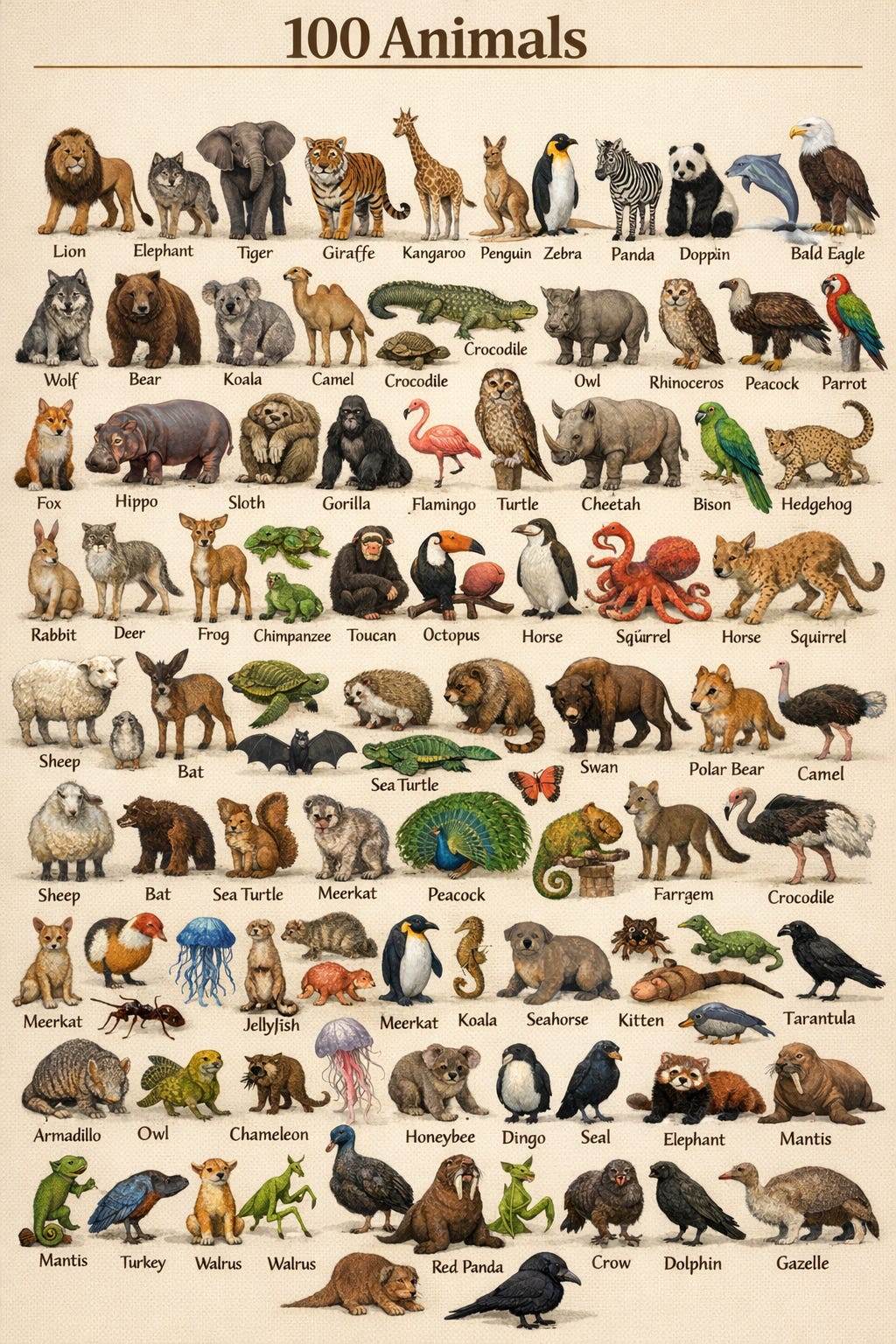

But the following prompt, sent to the latest OpenAI Image-1.5 model, produced spectacular results:

Create an image that depicts 100 animals with their names written below them

Lo and behold, the next generation of Pokemon:

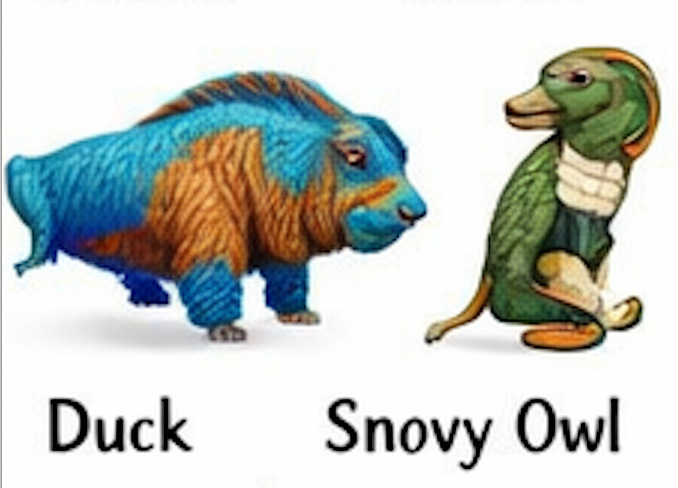

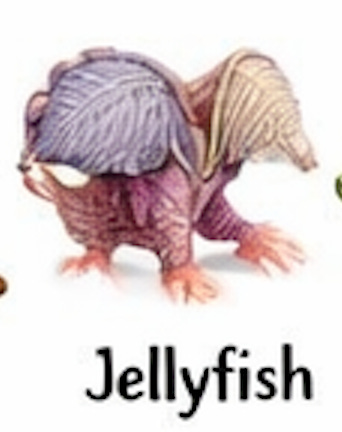

From far away it might look alright, but a closer inspection reveals bizarre creatures such as:

We can call this Pokemon Mothraptor. It distracts enemies with a colorful wing display, then grabs them with its lower legs to drain their HP. It is also three meters tall.

Duckillo with its friend Snovy Owl.

A jellyfish that looks like it escaped from the Upside Down world in Stranger Things.

A sea turtle that appears to be begging to be put out of its misery.

And finally: THE STARFISH! The starfish never skips leg day at the gym.

These results are reproducible, and the generation quality is consistently about the same:

The star of this batch is definitely the kitten from your nightmares:

Now let us talk about what is happening here and where these cursed animals come from.

Along the way, we discovered two interesting patterns:

Outputs become more degraded and less semantically aligned toward the end of the image.

A reader pointed out on LinkedIn that duplicating the prompt helps reduce obvious errors. Simply writing the same instruction twice delivers noticeably better, though still imperfect, results.

Create an image that depicts 100 animals with their names written below them

Create an image that depicts 100 animals with their names written below them
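If you want to try this repeatedly, the duplication itself is easy to script. Below is a minimal sketch in Python, not anything specific to OpenAI: the helper name `repeat_prompt` and the blank-line separator are assumptions made for illustration, and the code only builds the prompt text. It does not call any image API.

```python
def repeat_prompt(prompt: str, times: int = 2, separator: str = "\n\n") -> str:
    """Return the prompt repeated `times` times, joined by `separator`.

    Hypothetical helper: the name and the blank-line separator are
    illustrative choices, not part of any image-generation API.
    """
    if times < 1:
        raise ValueError("times must be at least 1")
    return separator.join([prompt.strip()] * times)


base_prompt = (
    "Create an image that depicts 100 animals "
    "with their names written below them"
)

# Build the doubled prompt exactly as shown above: same instruction, twice.
doubled_prompt = repeat_prompt(base_prompt)
print(doubled_prompt)
```

The resulting string can then be pasted into the chat interface or passed as the prompt to whichever image-generation client you use.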

This is not about prompt engineering, though. These patterns are valuable hints at what is happening inside the black box and what architecture OpenAI's image models use.

With this knowledge, you will see how to prompt these models and where their limitations lie.

So let us dive in.

Behind the paywall, you will find a simple explanation:

what today’s “state-of-the-art” image models are,

why they suddenly got much better at generating text in images,

why the same change also made them produce bizarre creatures,

and why this architecture will never be able to reliably generate things like electrical diagrams, technical drawings, or precise maps.

You will also understand what these models are actually good at, where they shine, and where they should not be used at all.

And yes, you will get a clear answer to a very practical question:

does repeating your prompt actually help image generation, or is that just a myth?

Behind the paywall is the full explanation, concrete examples, and the uncomfortable reality of what these models can and cannot do.