Continual Learning: The Next Era of Domain Adaptation

Why Titan Memories might finally put an end to the hallucinating RAG bot.

Right after ChatGPT came out, many people started asking: “How can we add our own data to the model?” The model was an inference-only API with no alternatives: no fine-tuning, no LoRAs, no open-weight models, nothing at all.

Unsurprisingly, a lot of people remembered the idea of providing search results to the model as a part of the prompt.

When I first heard about this, my reaction was that it sounded really dumb, but at the same time, there was nothing better, so we had to do this silly thing. I hoped that it was a temporary workaround until a better approach came out.

However, as time passed, this approach became the state of the art. The idea was not new and already had a fancy name: Retrieval-Augmented Generation (RAG). It created an illusion of mitigating hallucinations and of effective domain adaptation while, in fact, being highly unstable and paving the way for hallucinations that are hard to spot.

Three years later, it looks like we finally have hope of no longer building hallucinating RAG bots. There is now a method of domain adaptation that uses native deep learning methods, builds on the very core of the transformer architecture (the attention mechanism), and is both computationally efficient and trainable.

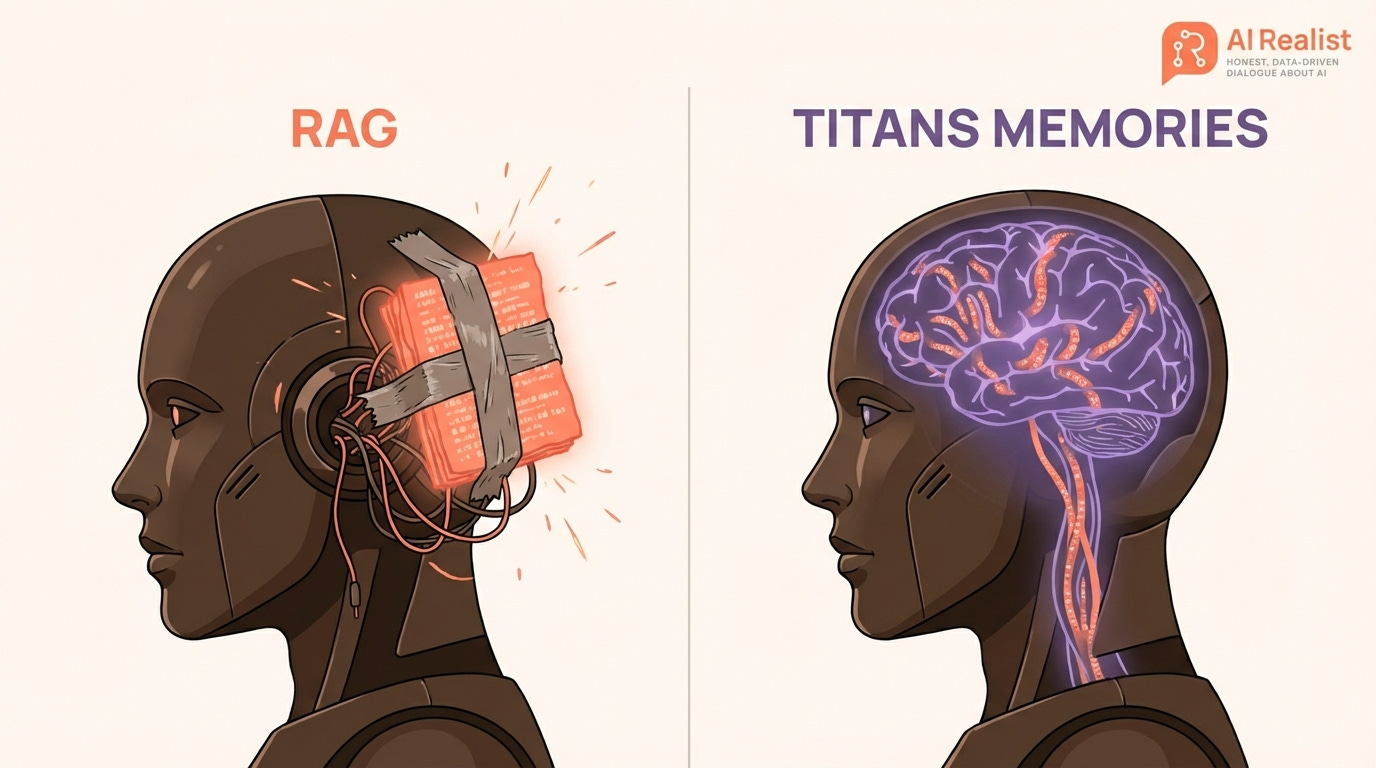

I am talking about the latest breakthrough in continual learning: Titan Memories. This may open a new era of domain adaptation for transformers, moving beyond fine-tuning (which suffers from catastrophic forgetting) and RAG (which is just an architectural abomination).

Why RAG is bad

RAG is a fantastic way to make models hallucinate. On the one hand, it does reduce hallucinations; on the other, when the model does hallucinate, it does its best to ground the nonsense in your data, so you cannot immediately tell that it is nonsense. This phenomenon is referred to as grounded hallucination: the model generates factually incorrect content that appears consistent with the provided source material.
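
To make the mechanism concrete, here is a minimal sketch of the retrieve-then-prompt pattern in Python. Everything in it is an illustrative assumption rather than any particular product's implementation: the `retrieve` and `build_prompt` helpers, the toy word-overlap scoring (real systems typically use embeddings or BM25), and the sample documents are all hypothetical. The point to notice is that the retrieved passages are simply pasted into the prompt as text; nothing in this pipeline checks that the model's answer actually follows from them.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Toy retriever (assumption): rank documents by word overlap with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Paste the retrieved passages into the prompt ahead of the question."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Hypothetical sample data, for illustration only.
documents = [
    "Invoices are payable within 30 days of receipt.",
    "The office is closed on public holidays.",
    "Late invoices incur a 2 percent fee per month.",
]
query = "When are invoices payable?"

passages = retrieve(query, documents, k=2)
prompt = build_prompt(query, passages)
print(prompt)  # this string is what would be sent to the model
```

The model only ever sees the final string. Whether it answers from the passages, from its pretrained weights, or from a blend of both is not something the prompt can enforce, which is where grounded hallucinations get their opening.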
