GenAI Fails to Pay Off

Analysis of the MIT Study: Soundness, Feasibility, and Implications

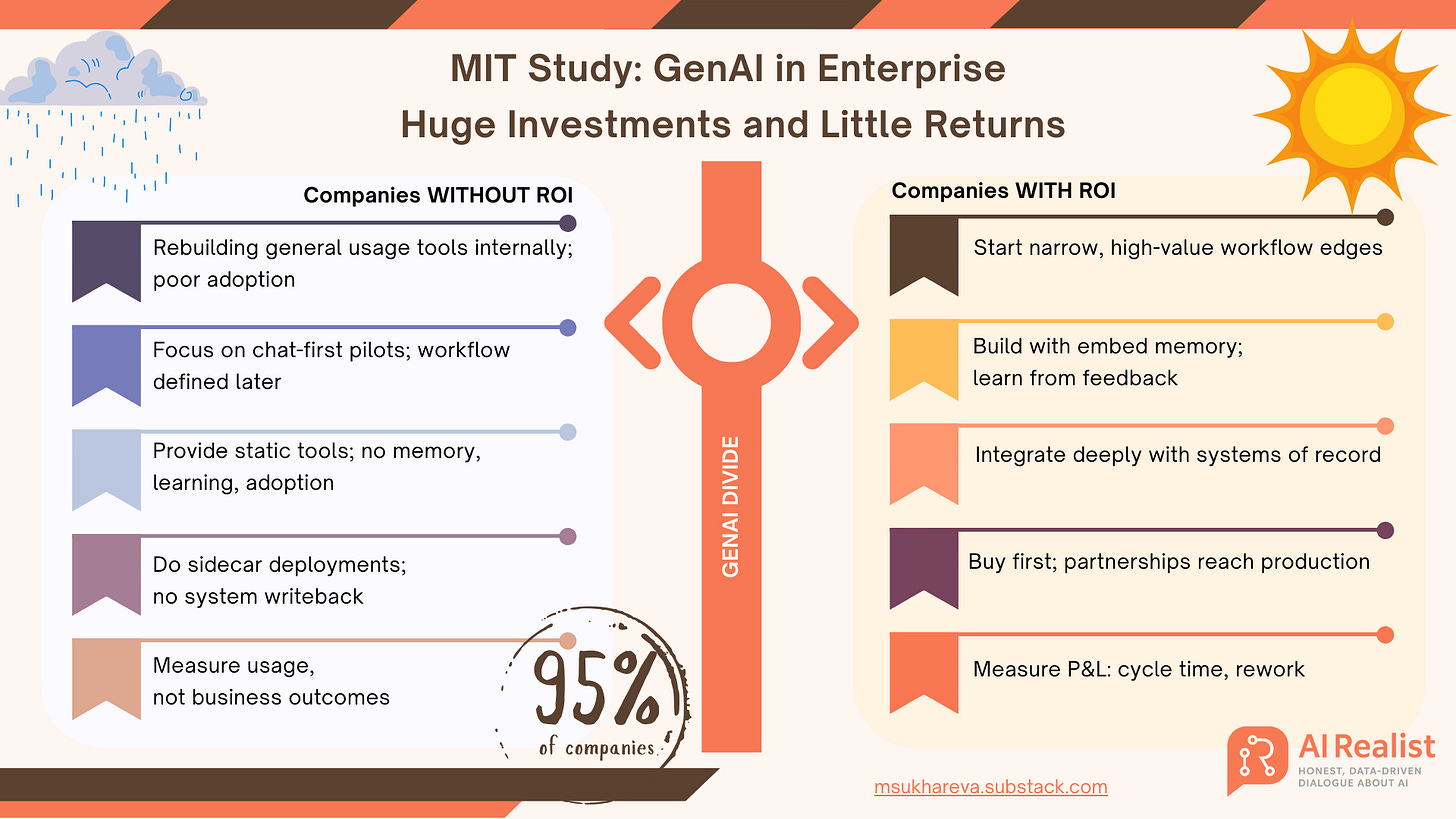

The MIT report “The GenAI Divide: STATE OF AI IN BUSINESS 2025” made a lot of headlines. Most of them say that 95% of organizations are getting zero ROI from generative AI.

The report also puts enterprise GenAI spend at $30–40 billion.

I have to say I’m not surprised at all.

For the past two years I’ve said this was likely: almost all AI applications are “nice to have,” far from a company’s core processes. GenAI adoption mostly meant building an army of hallucinating RAG bots for every FAQ, reinventing the wheel with in-house models, rebuilding the ChatGPT website, or integrating GenAI at random, e.g., forcing agents into processes that are hard to formalise.

But let’s talk about the report itself. How grounded is it? Or is it just another hype drop, now that AI is falling out of favor and the anti-AI train is gaining momentum, much as the AGI hype did some time ago?

Who Wrote the Report

The report is authored by Aditya Challapally, Chris Pease, and Ramesh Raskar, with Pradyumna Chari as a reviewer, and is branded MIT NANDA. It is explicitly labeled “Preliminary Findings from AI Implementation Research from Project NANDA” and includes methods, review credits, and a disclaimer that the views are the authors’ own:

“The views expressed in this report are solely those of the authors and reviewers and do not reflect the positions of any affiliated employers.”

NANDA stands for Networked Agents And Decentralized Architecture. It is an MIT initiative led out of the Media Lab that’s pushing infrastructure for an “Internet of AI Agents.” They advocate for interoperable, learning agents, often discussed alongside Model Context Protocol and Agent-to-Agent standards. The report is framed to support NANDA’s agenda and puts emphasis on the need for agent-based systems. It positions their approach in the same paradigm as MCP and A2A, arguing that for GenAI to succeed, a common standard must be adopted, ideally theirs.

Nothing criminal so far: a group of researchers identifies a problem (poor adoption and low impact of GenAI) and proposes a solution (their standard). Let’s now look at their methodology, conclusions, and proposed standard to see whether they actually hold up.

Further in the article:

Evaluation of the study’s methodology: sound, but with significant limitations.

Why users prefer general tools over specialised solutions and how Shadow AI can boost productivity more than enterprise offerings.

The GenAI divide: 95% of organisations are on the wrong side and what caused it.

Solutions to the GenAI divide.
