Is Internet Content Mostly Generated By AI?

How Can We Tell What’s Human?

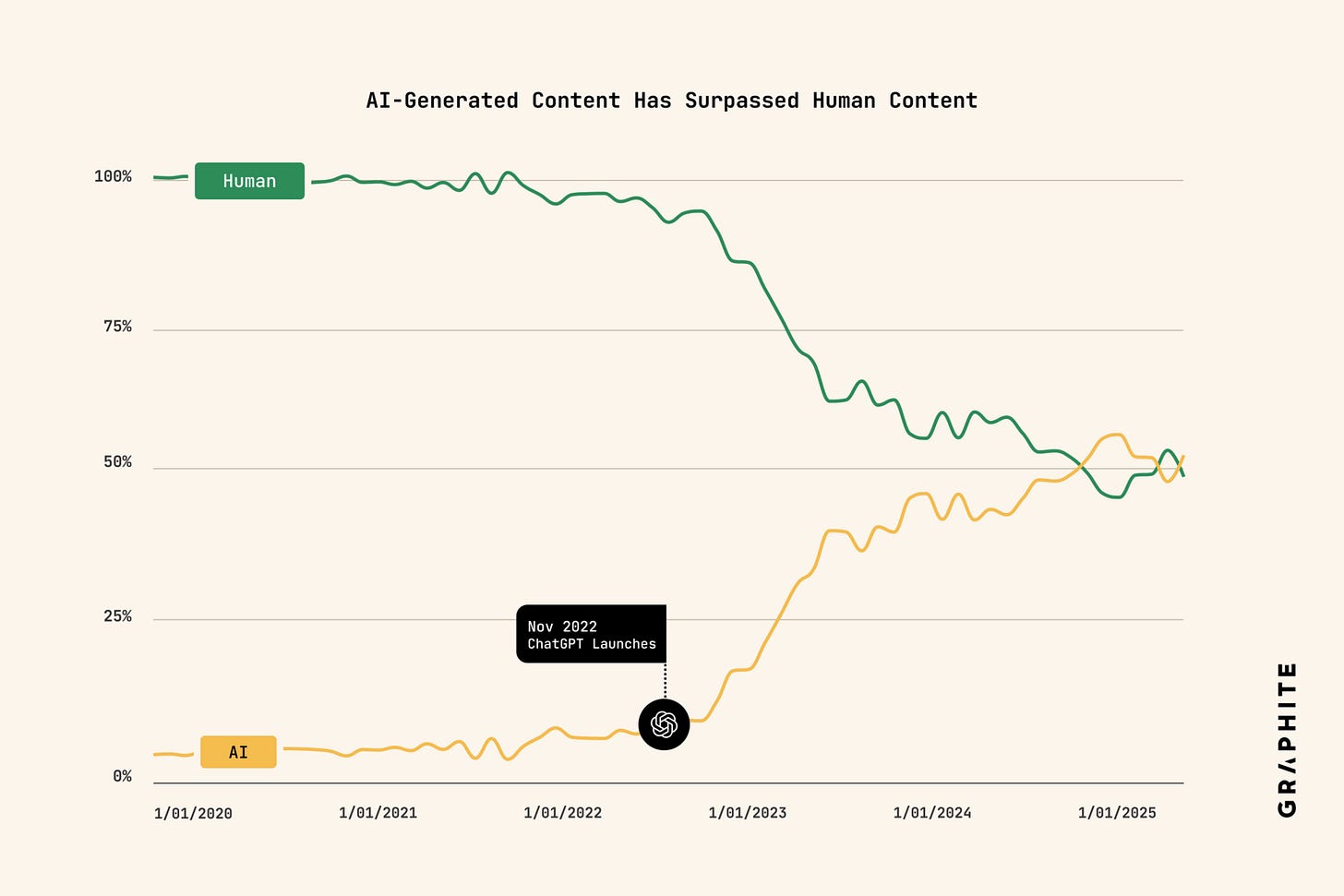

A few days ago, this graph went viral. Many people reposted it, expressing concern about the growing amount of AI-generated text. Does this mean the internet is now filled with AI slop? That human writers are no longer needed? Not so fast. Let’s first break this study down.

In this newsletter, I argue that although the study includes an interesting graph, it has substantial flaws and by no means settles whether, or to what degree, AI-generated content has surpassed human-written content.

The Reliability of the Graphite Study

Let us first examine the original study and how reliable it is.

The study was published by Graphite, a relatively small consulting company (around 200 employees) focused on SEO and so-called AEO (Answer Engine Optimization). Its main product is an AI tool that helps optimize content so that search engines and LLMs can discover it.

My first question when I saw this chart was:

How do you know which content is AI-generated?

While there are certain “ChatGPT-isms” out there, current state-of-the-art detection tools cannot guarantee highly accurate predictions.

My second concern was:

This chart completely ignores a large subset of articles: those written by humans but proofread or rewritten by AI.

These types of articles may be almost indistinguishable from AI-written ones.

So, let us review the paper and see whether it addresses these issues.

They chose to use a tool called SurferAI to determine whether an article was written by a human or AI. The articles were split into segments of 500 words, and the final label was assigned based on a majority vote across the segments.
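The labeling procedure can be sketched roughly as follows. This is a minimal illustration, not Graphite's or SurferAI's code: `classify_segment` and `toy_detector` are hypothetical stand-ins for the detector, and two details are my own assumptions because they are not described here: a shorter final segment is kept as its own segment, and a tie counts as "human".

```python
from collections import Counter
from typing import Callable, List

SEGMENT_WORDS = 500  # segment length described in the study


def split_into_segments(text: str, size: int = SEGMENT_WORDS) -> List[str]:
    """Split text into consecutive chunks of `size` words (the last chunk may be shorter)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def label_article(text: str, classify_segment: Callable[[str], str]) -> str:
    """Label an article 'ai' or 'human' by majority vote over its segment labels.

    `classify_segment` is a hypothetical stand-in for a detector such as SurferAI.
    Ties (and empty texts) resolve to 'human' here; this rule is an assumption.
    """
    votes = Counter(classify_segment(seg) for seg in split_into_segments(text))
    return "ai" if votes["ai"] > votes["human"] else "human"


# Hypothetical toy detector: flags any segment containing the word "delve".
def toy_detector(segment: str) -> str:
    return "ai" if "delve" in segment.split() else "human"


# 1,200 words -> three segments; the first two contain "delve", the last does not.
sample = ("delve " + "word " * 499) * 2 + "word " * 200
label = label_article(sample, toy_detector)  # two of three segments vote "ai"
```

With majority voting, an article counts as AI-generated only when more than half of its segments are flagged, so a human-written article with a few AI-polished passages could plausibly land on either side of the line.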

To evaluate SurferAI’s performance, the authors crawled a collection of older articles written before ChatGPT’s release and labeled them with SurferAI. They also generated multiple texts with ChatGPT and measured the accuracy on this subset.

For human-written articles, the proportion of falsely labeled samples was 4.2%, while for AI-written ones, it was only 0.6%.
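These are per-class error rates: the share of known-human articles labeled as AI, and the share of known-AI articles labeled as human. A minimal sketch of that computation follows; the sample counts are illustrative assumptions chosen to reproduce the reported 4.2% and 0.6%, not the study's actual data.

```python
from typing import Dict, Iterable, List, Tuple


def per_class_error_rates(pairs: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """For each true label, return the fraction of samples the detector mislabeled."""
    totals: Dict[str, int] = {}
    errors: Dict[str, int] = {}
    for true_label, predicted in pairs:
        totals[true_label] = totals.get(true_label, 0) + 1
        if predicted != true_label:
            errors[true_label] = errors.get(true_label, 0) + 1
    return {label: errors.get(label, 0) / n for label, n in totals.items()}


# Illustrative (true_label, predicted_label) pairs; the counts are assumed, not the study's.
example_pairs: List[Tuple[str, str]] = (
    [("human", "human")] * 958 + [("human", "ai")] * 42
    + [("ai", "ai")] * 994 + [("ai", "human")] * 6
)

rates = per_class_error_rates(example_pairs)
human_accuracy = 1 - rates["human"]  # roughly 0.958, i.e. the 95.8% figure
```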

Criticism of the Approach

Here is my main objection: SurferAI does not disclose its training data. To test the tool, the authors of the Graphite study used Common Crawl articles written before November 2022. There is a high chance these articles were also part of SurferAI’s training data, in which case its high reported accuracy on human-written articles (95.8%, i.e. 100% minus the 4.2% error rate) could simply be explained by testing on training data. This cannot be ruled out.

Later in the article: a deeper critique of the study, an overview of related peer-reviewed research on how well AI detectors perform, and a list of the best AI detectors to try out (both free and commercial).
