Why So Many AI Projects Fail

How AI Slop Became Our AI Strategy

How often have we heard from consultants and so-called “AI experts”, read in LinkedIn posts, or seen on corporate slides that to get a return on investment from AI we need to do the following:

Automate repetitive tasks

Make data-driven decisions

Use chatbots/AI agents/digital assistants for customer support

Speed up innovation and R&D by ideation with LLMs

Hyper-personalise customer experience

I have a feeling that I have seen this list at least 20 times. So why has an MIT study shown that 95% of companies fail to get a return on investment?

Here is the first AI Realist movie, about our guy Mike, who was forced to deal with AI transforming his job.

Disclaimer: All visuals in this article were generated using MiniMax services. MiniMax kindly provided complimentary credits for AI Realist. There was no payment, no sponsorship, no request for promotion, and no request to include this disclaimer.

This list keeps repeating itself everywhere, and yet it rarely delivers ROI. Most of the analyses one reads are full of “could”, “will”, and “might”, as in:

Overall, J.P. Morgan Research estimates generative AI could increase global GDP by $7–10 trillion, or by as much as 10%.

But as soon as we switch to the past tense, the picture changes:

JPMorgan Chase economists didn’t find a strong link between AI use and industry productivity. More recently, they found that outside of tech industries, there was no apparent relationship between AI use and slower employment growth.

AI adoption is also not as stellar as expected:

The Budget Lab analysis found that in the three months ended November 2022, the share of U.S. workers who were in occupations that are highly exposed to ChatGPT was around 18.2%. In the three months ended in August of this year, that share was close to unchanged at 18.3%.

If the recipe is so clear and shows up on every slide (automate repetitive tasks, build chatbots, and wait for the AI revolution), then why are the results so disappointing?

Contrarian to AI slop

The answer is simple: if you ask major LLMs how to get ROI from AI, you will get exactly this list.

That is what you see on all those slides. The output of stochastic parrots.

Prompt: How can a company get ROI from AI? - Give five concise bullet points

I call it a mini model collapse. AI produces text, that text gets reused by “AI experts”, their content gets added back into the next LLMs, and all models keep repeating the same old advice that never works.

I honestly do not understand why companies keep paying consultants and “AI” trainers with random degrees in economics or marketing to repeat what any LLM can generate in seconds.

When I listen to another AI guru with a prompt engineering certificate, I enjoy guessing which ChatGPT prompt they used to produce their slides.

And here is the funny part. Since anyone can prompt a model and get a reply that sounds smart but is actually useless, we end up with countless people repeating the same lines. The irony is that by disagreeing with them, you become a contrarian, both to them and inevitably to the AI slop they repeat. In this case, the contrarian position is simply refusing to accept AI slop.

The Contrarian Guide to AI ROI

I have seen many AI strategies, use cases, PoCs, and the misery of users arguing with hallucinating bots or cursing at poor automation of repetitive tasks. I promise you, every company, every inexperienced AI leader, every data scientist who turned into the in-house AI guru because they were the closest thing the company had, does exactly this: automate repetitive tasks, chase productivity through a list of use cases, and tick off the bullets from the slide. It is sad.

So let me tell you where I saw success, where this strategy fails, and why.

Repetitive Tasks Are Not Low-Hanging Fruit

It sounds logical that repetitive tasks are the ones to automate and should be easy to handle. Unfortunately, it is not that simple.

It is not about how often a person performs a task. It is about the workflow around it. Imagine Mike, who has worked in a company for 20 years and manages access rights to different parts of the building by configuring corporate cards. Every day he gets a list of people who changed departments, left the company, got promoted, or requested new access.

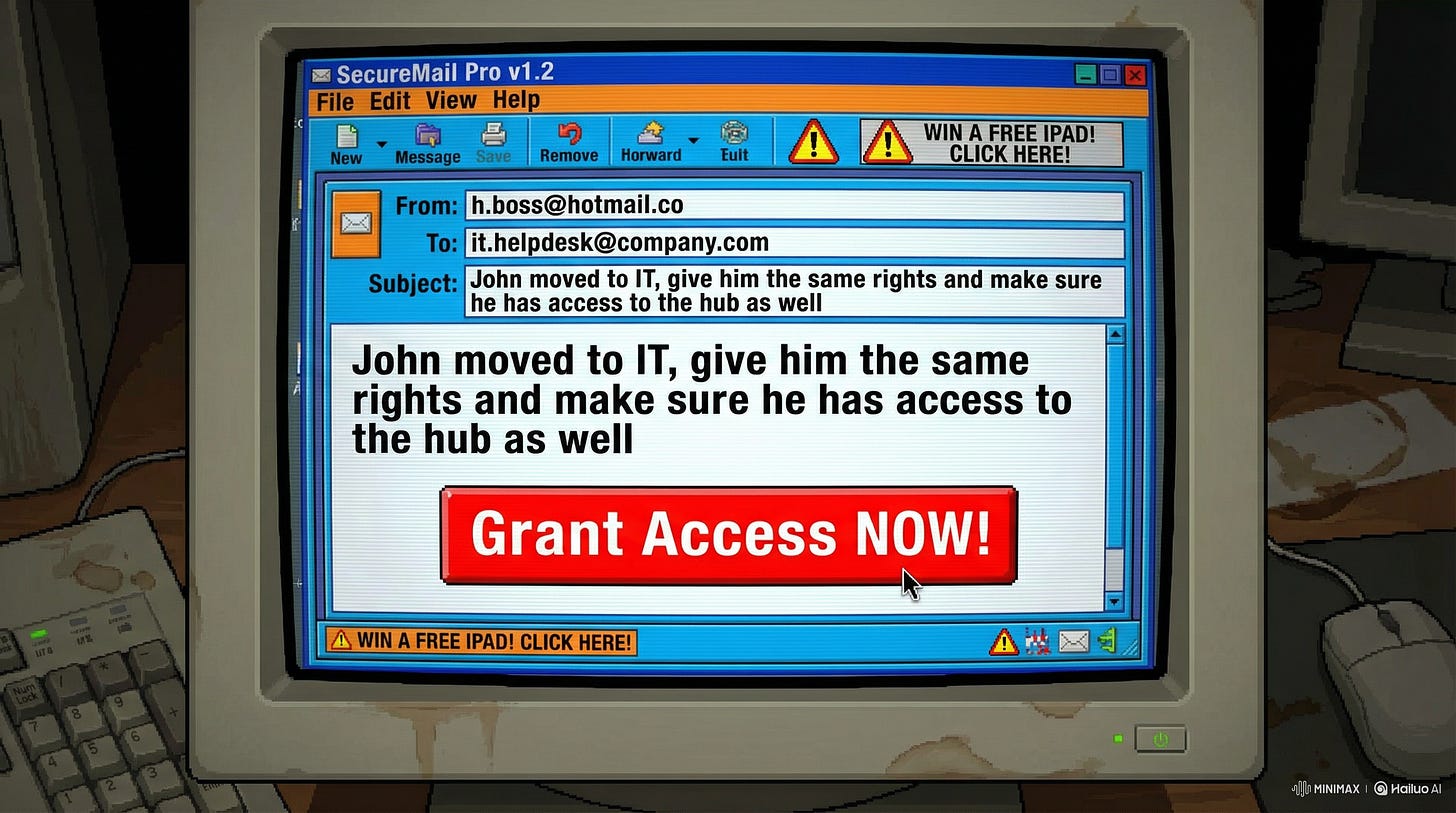

The system is also 20 years old, with an ancient interface and a clunky form. It is proprietary software the company bought together with the card system. Mike knows the buildings and departments by heart. He knows which roles to assign, where to click, and what a person means when they write something like

“John moved to IT, give him the same rights as James and make sure he has access to the hub as well.”

Yes, the request arrives by email. He understands it because James moved to IT the day before, and the hub is located in building 1. He opens the system, clicks through, updates his Excel sheet, records the change, and moves on.
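To make the hidden dependency concrete, here is a minimal Python sketch of what it takes to resolve that one email. Everything in it is hypothetical and invented for illustration: the `Context` class, the `resolve_request` function, and James's rights ("IT floor", "server room"). Only the hub being in building 1 comes from the story. It models nothing about the real card system; it only shows that the email is not executable without the background knowledge Mike carries around.

```python
from __future__ import annotations

from dataclasses import dataclass, field


# All names and data here are hypothetical, invented for illustration.
@dataclass
class Context:
    # The knowledge Mike has in his head (and partly in his Excel sheet).
    rights_by_person: dict[str, set[str]] = field(default_factory=dict)
    building_by_place: dict[str, str] = field(default_factory=dict)


def resolve_request(same_as: str, extra_places: list[str], ctx: Context) -> set[str]:
    """Turn 'same rights as X, plus access to Y' into a concrete set of rights."""
    missing = []
    if same_as not in ctx.rights_by_person:
        missing.append(f"rights of {same_as}")
    for place in extra_places:
        if place not in ctx.building_by_place:
            missing.append(f"location of {place}")
    if missing:
        # The email alone does not contain this information.
        raise LookupError("cannot resolve request, missing: " + ", ".join(missing))

    rights = set(ctx.rights_by_person[same_as])  # copy, do not mutate James's entry
    rights.update(ctx.building_by_place[place] for place in extra_places)
    return rights


# Mike knows what James was given yesterday and where the hub is.
mike = Context(
    rights_by_person={"James": {"IT floor", "server room"}},
    building_by_place={"hub": "building 1"},
)

# Anyone (or anything) reading the same email without that background.
newcomer = Context()
```

With `mike` as the context, `resolve_request("James", ["hub"], mike)` returns a concrete set of rights. With `newcomer`, the same call raises a `LookupError` listing what is missing. The request text is identical in both cases; the difference is entirely in the context around it.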

Now an AI guru decides this is a perfect repetitive task to automate.